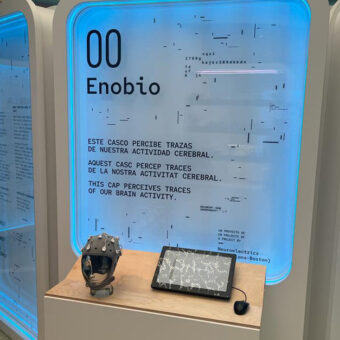

I would like to comment on two of my favourite application fields of Machine Learning, namely Computer Vision and Brain-Computer Interfaces. Some groups are currently working on combining them. The underlying idea is to combine the ability of modern Computer Vision algorithms and of the human brain to find visual patterns in images. Although Computer Vision algorithms have evolved impressively in recent times, they still cannot match the robustness and speed of the human brain in recognizing and understanding visual patterns and objects. By combining the two you get the best of both worlds.

Cortically coupled Computer Vision

This line of work originated with a paper by Lucas Parra and colleagues[1], in which they tried to optimize the analysis of satellite images. Traditionally a human operator sat in front of a computer screen, analysing satellite images one after another in search of surface-to-air missile (SAM) sites. As you can imagine, this is a very tedious task. So they had the brilliant idea of semi-automating the process by analysing the operator's EEG. They found that it was possible to tell when the operator had spotted a target by detecting the event-related potential known as the P300 in the EEG signals.

As you might know if you have been following this blog regularly, the P300[2] is a positive peak[3] in the EEG signal that appears about 300 ms after a person shifts their attention to a particular stimulus. This wave is related to the attentional and memory systems. Hideaki Touyama also proposed a system for photo retrieval using this mechanism[4]. Following these works, the group of Prof José Millán[5] at EPFL in particular is trying to exploit this type of system.

Rapid Serial Visual Presentation Protocol

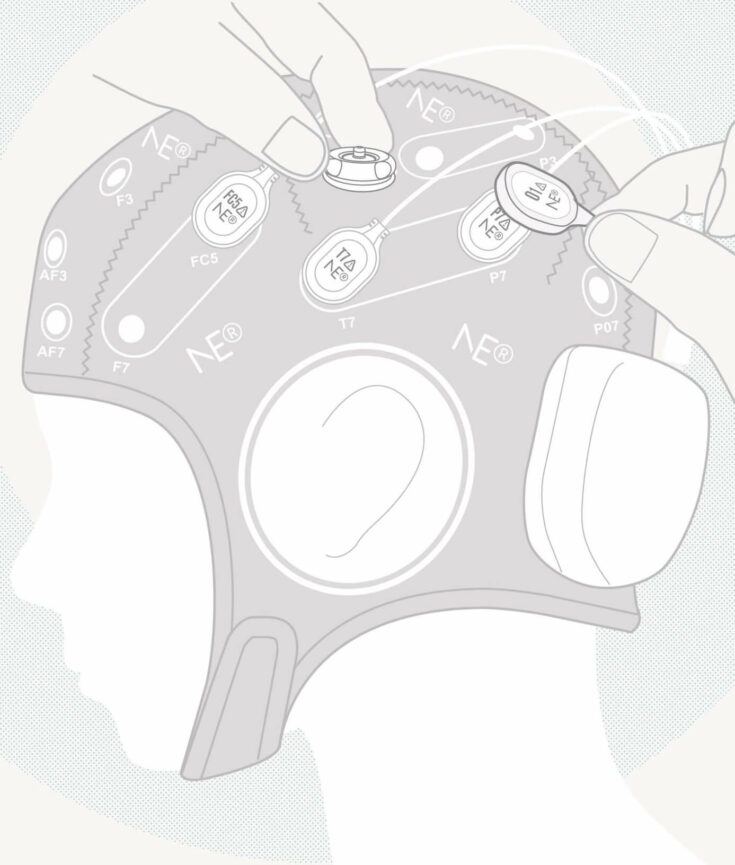

We at Starlab are also working in this application field. We follow the so-called Rapid Serial Visual Presentation (RSVP) protocol, whereby a sequence of images is presented to the subject. Such a paradigm has been used, for instance, to elicit the P300 in Brain-Computer Interface systems. In the protocol we first present the so-called target image to the subject. After she has memorized it, a sequence of images including the target image is shown several times, with the images in random order each time. The appearance of the target image is expected to elicit the P300 waveform, with a peak at around 300-400 ms. Curiously enough, there seems to be a relationship between the latency of the peak and the complexity of the analysed image. We use an inter-stimulus interval, i.e. the time lag between two consecutive images, of 100 ms, although you can find works in the literature where intervals as short as 50 ms have been used.
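The presentation schedule described above can be sketched in a few lines of Python. This is only an illustration, not the software we use: the function name `build_rsvp_block`, the image file names and the choice of nine non-target images are all assumptions made for the example.

```python
import random

def build_rsvp_block(target, distractors, n_repetitions, isi_s=0.1, seed=None):
    """Return a list of (onset_seconds, image_id, is_target) tuples.

    Each repetition shows the target plus all distractors once,
    in a freshly shuffled order, with a fixed inter-stimulus interval.
    """
    rng = random.Random(seed)
    schedule = []
    onset = 0.0
    for _ in range(n_repetitions):
        images = [target] + list(distractors)
        rng.shuffle(images)  # random order within each repetition
        for image in images:
            schedule.append((round(onset, 6), image, image == target))
            onset += isi_s
    return schedule

# Hypothetical image names, 3 repetitions, 100 ms between images.
distractors = [f"img_{i}.png" for i in range(9)]
schedule = build_rsvp_block("target.png", distractors, n_repetitions=3, seed=0)
for onset, image, is_target in schedule[:5]:
    print(f"{onset:5.2f} s  {image}  {'TARGET' if is_target else ''}")
```

The `is_target` flag is what you would later use to label the EEG segment that follows each image onset.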

Unexpected surprises and Machine Learning goal

If you filter the signal and average all the sequences corresponding to target images, you will see the P300 peak emerging. The more sequences you average, the clearer the difference between the waves corresponding to target and non-target images. One surprise after this pre-processing is that you can find an oscillation in the averaged sequences. This oscillation corresponds to the presentation frequency. So if you use an inter-stimulus interval of 100 ms, you find a 10 Hz oscillation (1 / 0.1 s) that can even mask the P300. This is a steady-state response similar to the one used in Steady-State Visual Evoked Potentials[6] (another BCI modality). You can get rid of it by randomizing the inter-stimulus interval. What surprised me most was that no work in the literature seems to mention this effect.
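A small simulation makes this effect easier to see. It is a toy model under stated assumptions, not our recorded data: every image is assumed to evoke a brief Gaussian-shaped visual response 50 ms after onset, the target additionally evokes a P300-like bump at 350 ms, background EEG is white Gaussian noise, and the jittered condition draws each interval uniformly between 50 and 150 ms. The sampling rate, amplitudes and function names are all made up for the illustration.

```python
import math
import random

FS = 200                       # sampling rate in Hz (assumed)
EPOCH_S = 1.0                  # epoch length after target onset, in seconds
N_SAMPLES = int(FS * EPOCH_S)

def bump(t, centre, width):
    """Gaussian-shaped deflection centred at `centre` seconds."""
    return math.exp(-((t - centre) / width) ** 2)

def neighbour_onsets(rng, jitter_s):
    """Onsets of the non-target images shown before and after a target at t = 0."""
    onsets = []
    for direction in (-1, 1):
        t = 0.0
        while -0.4 < t < EPOCH_S + 0.2:
            t += direction * (0.1 + rng.uniform(-jitter_s, jitter_s))
            onsets.append(t)
    return onsets

def averaged_epoch(n_epochs, jitter_s, seed=0):
    """Average of simulated target-locked epochs."""
    rng = random.Random(seed)
    avg = [0.0] * N_SAMPLES
    for _ in range(n_epochs):
        onsets = [0.0] + neighbour_onsets(rng, jitter_s)
        for i in range(N_SAMPLES):
            t = i / FS
            x = rng.gauss(0.0, 1.0)                              # background noise
            x += sum(bump(t, o + 0.05, 0.015) for o in onsets)   # response to every image
            x += bump(t, 0.35, 0.05)                             # P300, target only
            avg[i] += x / n_epochs
    return avg

def amplitude_at(signal, freq_hz):
    """Amplitude of a single frequency component (one-bin DFT)."""
    re = sum(x * math.cos(2 * math.pi * freq_hz * i / FS) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * i / FS) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)

fixed = averaged_epoch(100, jitter_s=0.0)      # constant 100 ms interval
jittered = averaged_epoch(100, jitter_s=0.05)  # interval drawn from 50-150 ms
print("10 Hz amplitude, fixed interval:   ", round(amplitude_at(fixed, 10.0), 3))
print("10 Hz amplitude, jittered interval:", round(amplitude_at(jittered, 10.0), 3))
```

With a fixed interval the responses to neighbouring images stay time-locked to the target, so they survive averaging and show up as a 10 Hz component. With a jittered interval they fall at different latencies in every epoch and largely average out, while the P300 remains.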

The goal of a Machine Learning system here is to correctly separate the sequences corresponding to target images from those corresponding to non-target images. Ideally this should be achieved each time a target image appears, which is known in the field as single-trial classification. This is a tough task for a Machine Learning algorithm! I will be happy to share our results on this application in the near future.
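To give a feeling for why single-trial classification is so much harder than working with averages, here is a deliberately simple sketch. It is not our classifier: it assumes that each trial is reduced to one feature (the mean amplitude in the P300 window), that the noise is Gaussian and much larger than the P300 itself, that targets and non-targets are equally frequent (in a real RSVP stream targets are rare), and that a fixed threshold is used. All names and numbers are hypothetical.

```python
import random
import statistics

def window_feature(rng, is_target, n_avg=1, p300_amp=1.0, noise_sd=2.0):
    """Simulated mean amplitude in the P300 window, averaged over `n_avg` trials."""
    trials = [
        (p300_amp if is_target else 0.0) + rng.gauss(0.0, noise_sd)
        for _ in range(n_avg)
    ]
    return statistics.fmean(trials)

def accuracy(n_avg, n_test=2000, seed=0):
    """Fraction of correct target / non-target decisions with a fixed threshold."""
    rng = random.Random(seed)
    threshold = 0.5  # midpoint between the two class means (p300_amp / 2)
    correct = 0
    for k in range(n_test):
        is_target = k % 2 == 0  # balanced classes, a simplifying assumption
        predicted = window_feature(rng, is_target, n_avg) > threshold
        correct += predicted == is_target
    return correct / n_test

print("single trial:          ", accuracy(n_avg=1))
print("average of 16 trials:  ", accuracy(n_avg=16))
```

Averaging 16 trials shrinks the noise by a factor of four, which is why the averaged case is classified far more reliably than a single trial in this toy setting. A real system has to recover that margin from one trial, typically by using more informative features than a single window amplitude.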

[1] bme.engr.ccny.cuny.edu/faculty/lparra/publish/tnsre2006-c3v.pdf

[2] https://blog.neuroelectrics.com/blog/bid/323640/Quick-Tips-for-P300-Detection

[3] https://blog.neuroelectrics.com/blog/bid/318938/14-Event-Related-Potentials-Components-and-Modalities

[4] http://dl.acm.org/citation.cfm?id=1522930

[5] http://cnbi.epfl.ch/page-34063-en.html

[6] https://blog.neuroelectrics.com/blog/bid/277640/SSVEP-based-BCI-managing-your-favorite-music-playlist