In his blog post a few weeks ago, Alejandro Riera talked about characterizing stress based on EEG[1]. That post presented the generation of EEG features based on ratios and differences in particular frequency bands. Today I would like to cover the second part of the story: how to go from such features to classifying activities according to their stress level. This can have applications like the one recently presented[2]. Anton Albajes-Eizagirre already introduced the general framework in his post on machine learning applied to affective computing[3], so here I will focus on the experimental results we have obtained on a particular dataset by using data fusion[4]. The work is described in detail in our paper Electro-Physiological Data Fusion for Stress Detection[5].

The multimodal setting for stress characterization

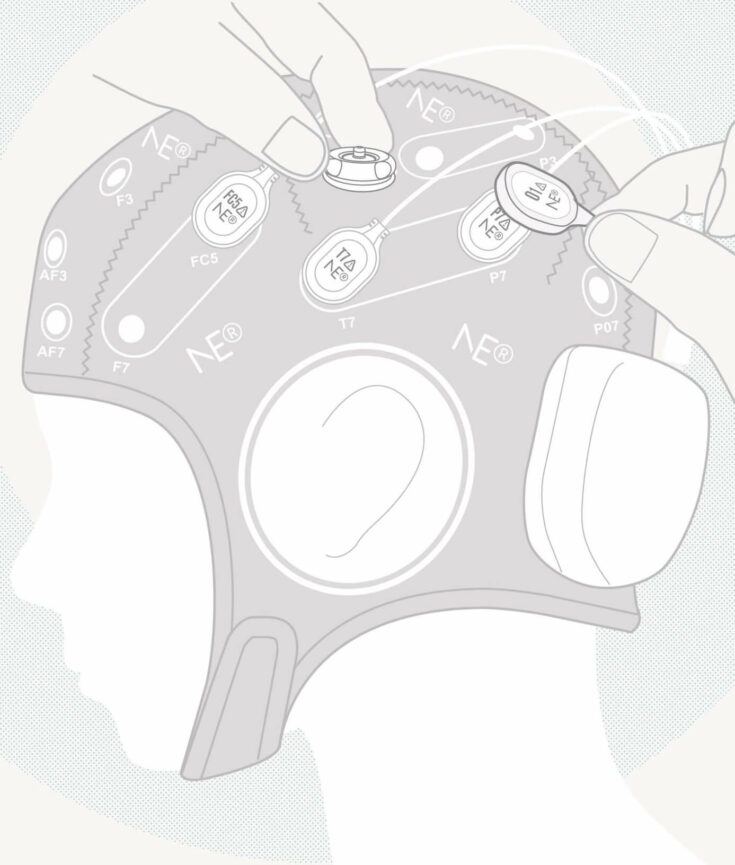

The purpose of the system is to fuse the data from two separate information channels, namely EEG and EMG. The goal is to classify stress-related tasks (e.g. mathematical computation or a fake blood extraction) versus tasks not related to stress (e.g. relaxing or reading). The idea is that by using these two electrophysiological modalities we can determine whether a subject is suffering from stress. We extract different features from the two modalities and then apply fusion operators in order to characterize the stress level of the task.

For EEG, we take into account the channels reported in the literature to be the most related to valence and arousal, namely those in the frontal area. We therefore select the pairs F3-F4 and F7-F8. On these we compute the alpha asymmetry and the alpha-beta ratios, which gives 6 EEG-based features. Moreover, we take into account the EMG energy of the zygomatic and the corrugator facial muscles. Facial EMG is known to be a good means of monitoring facial expressions and therefore of characterizing emotions. This gives us a system based on 8 features, delivered every second using an analysis window 2 seconds long.
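To make the feature set more concrete, here is a minimal sketch of how the 8 features of one analysis window could be computed. It is not the code used in the paper. Everything specific in it is an assumption made for illustration: the band limits (alpha 8-13 Hz, beta 13-30 Hz), the asymmetry defined as the difference of log alpha power between the right and left channel, one alpha-beta ratio per channel, EMG energy as the sum of squared samples, and the function names `band_power` and `window_features`.

```python
import numpy as np


def band_power(signal, fs, low, high):
    """Mean periodogram power of `signal` in the [low, high) Hz band."""
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    psd = np.abs(np.fft.rfft(signal)) ** 2 / len(signal)
    mask = (freqs >= low) & (freqs < high)
    return psd[mask].mean()


def window_features(eeg, emg, fs):
    """Return the 8 features of one analysis window.

    eeg: dict mapping "F3", "F4", "F7", "F8" to 1-D sample arrays.
    emg: dict mapping "zygomatic", "corrugator" to 1-D sample arrays.
    Band limits and feature definitions are assumptions, see the text above.
    """
    alpha = {ch: band_power(x, fs, 8.0, 13.0) for ch, x in eeg.items()}
    beta = {ch: band_power(x, fs, 13.0, 30.0) for ch, x in eeg.items()}

    feats = []
    # 2 alpha asymmetries, one per frontal pair (assumed: log right - log left)
    for left, right in (("F3", "F4"), ("F7", "F8")):
        feats.append(np.log(alpha[right]) - np.log(alpha[left]))
    # 4 alpha-beta ratios, one per channel (assumed)
    for ch in ("F3", "F4", "F7", "F8"):
        feats.append(alpha[ch] / beta[ch])
    # 2 EMG energies, one per facial muscle
    for muscle in ("zygomatic", "corrugator"):
        feats.append(np.sum(emg[muscle] ** 2))
    return np.array(feats)


# Usage with synthetic data: a 2-second window at a hypothetical 250 Hz rate
fs = 250
rng = np.random.default_rng(0)
eeg = {ch: rng.standard_normal(2 * fs) for ch in ("F3", "F4", "F7", "F8")}
emg = {m: rng.standard_normal(2 * fs) for m in ("zygomatic", "corrugator")}
features = window_features(eeg, emg, fs)  # array with 8 entries
```

In a running system this function would be called once per second on the most recent 2 seconds of data, which yields the overlapping windows described above.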

Data Fusion delivering a stress marker

After normalizing the time-dependent features to the interval [0,1], we pass them to a fusion operator. For this implementation we select the so-called Sugeno Fuzzy Integral[6], a generalization of the average based on max and min operators. The result of the fusion operation is a real number in the interval [0,1], which corresponds to the score of the multimodal data having been generated during a stress task, i.e. it acts as a marker of the stress experienced by the subject while performing the task. This score is the output of our system.
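As an illustration of the fusion step, the following sketch normalizes a feature vector and fuses it with a discrete Sugeno integral: the values are sorted in decreasing order, and the result is the maximum over i of the minimum between the i-th largest value and the fuzzy measure of the set formed by the i largest inputs. The fuzzy measure used in our paper is not described in this post, so the default below (the fraction of inputs in the set) is only a placeholder assumption, and the names `min_max_normalize` and `sugeno_integral` are hypothetical.

```python
import numpy as np


def min_max_normalize(features, lo, hi):
    """Map features into [0, 1] given per-feature lower and upper bounds."""
    scaled = (np.asarray(features, dtype=float) - lo) / (hi - lo)
    return np.clip(scaled, 0.0, 1.0)


def sugeno_integral(values, measure=None):
    """Discrete Sugeno integral of `values` (each in [0, 1]).

    measure: function taking a tuple of input indices and returning the
    fuzzy measure of that set in [0, 1]. It must be monotone, with the
    full set mapped to 1. Defaults to |A| / n, a placeholder assumption.
    """
    values = np.asarray(values, dtype=float)
    n = len(values)
    if measure is None:
        measure = lambda idx: len(idx) / n

    order = np.argsort(values)[::-1]  # indices sorted by decreasing value
    score = 0.0
    for i in range(n):
        top_set = tuple(order[: i + 1])  # the i+1 inputs with largest values
        score = max(score, min(values[order[i]], measure(top_set)))
    return float(score)


# Usage: fuse four already-normalized feature values into one score
score = sugeno_integral([0.9, 0.6, 0.3, 0.1])
```

With a measure that depends on which features are in the set, and not only on how many, the operator can give more weight to the more informative features or to particular combinations of them.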

How good are we at detecting stressful tasks?

For the performance evaluation we use a 5-fold cross-validation[7] procedure. We split the data into 5 subsets, use 80% of the data for training and 20% for testing, and repeat this procedure 5 times so that each subset is used once for testing. Finally we compute the True Positive Rate (TPR) and the False Positive Rate (FPR)[8], using the stress task epochs as the positive class to detect. The TPR is the percentage of stressful tasks that are correctly detected. The FPR is the percentage of relaxing tasks that are wrongly detected as stressful. Since the result of the fusion operator is a real-valued number, we have to apply a threshold in order to make a decision. The threshold determines how many correct decisions and errors we make, as expressed by the TPR and FPR. Different values of the decision threshold yield different TPR-FPR pairs, which can be plotted in a graph as shown in the attached Figure.
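The evaluation procedure can be sketched in a few lines. The snippet below is an illustrative assumption and not our actual evaluation code: `k_fold_indices` produces the 80%/20% train/test index splits, and `roc_points` sweeps a decision threshold over the fusion scores to obtain one TPR-FPR pair per threshold. Both function names, the shuffling seed, and the rule "score greater than or equal to the threshold means stress" are hypothetical choices.

```python
import numpy as np


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, test_idx


def roc_points(scores, labels, thresholds):
    """Return a list of (threshold, TPR, FPR) tuples.

    scores: fusion outputs in [0, 1], one per epoch.
    labels: 1 (or True) for stress-task epochs, 0 for non-stress epochs.
    An epoch is detected as stressful when its score >= threshold.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    points = []
    for t in thresholds:
        detected = scores >= t
        tpr = np.sum(detected & labels) / np.sum(labels)
        fpr = np.sum(detected & ~labels) / np.sum(~labels)
        points.append((float(t), float(tpr), float(fpr)))
    return points


# Usage: sweep 11 thresholds over toy scores for four epochs
toy_scores = [0.9, 0.8, 0.4, 0.2]
toy_labels = [1, 1, 0, 0]
curve = roc_points(toy_scores, toy_labels, np.linspace(0.0, 1.0, 11))
```

Plotting the FPR values against the TPR values of `curve` gives the kind of graph described above, with one point per threshold.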

We test the system with data from 12 subjects (denoted S1 to S12). As can be observed, we obtain excellent performance for 3 out of 12 subjects (with correct decisions in 97% of the tasks). The lowest performance is around 83% of the tasks, for 2 of the subjects. On average, 91.7% of the tasks are correctly detected. In summary, this is an excellent performance for a real-time system like the one we have implemented, which delivers a decision every 2 seconds. Can you imagine your computer telling you to take a rest when it detects that you are getting too stressed?

[1] https://blog.neuroelectrics.com/blog/bid/326289/Stress-and-EEG

[2] http://neurogadget.com/2014/02/11/mindrider-bike-helmet-flashes-cyclists-emotions-maps-stressed/9849

[3] https://blog.neuroelectrics.com/blog/bid/265970/Machine-learning-applied-to-affective-computing-or-how-to-teach-a-machine-to-feel

[4] https://blog.neuroelectrics.com/blog/bid/223238/What-the-hell-is-Data-Fusion

[5] http://www.ncbi.nlm.nih.gov/pubmed/22954861

[6] http://en.wikipedia.org/wiki/Sugeno_integral

[7] https://blog.neuroelectrics.com/blog/bid/286066/How-Good-is-my-Computational-Intelligence-Algorithm-for-EEG-Analysis

[8] http://en.wikipedia.org/wiki/Receiver_operating_characteristic