In our last rendezvous, ‘Affective computing (or how your PC will know how you feel)’, I introduced the state-of-the-art methodologies for emotion detection. I covered methodologies based on facial expressions and body gestures, as well as those based on speech and on physiological signals, including EEG, galvanic skin response and heart rate variability.

In his last post, ‘How to understand the machine learning family’, Aureli Soria-Frisch gave a clear overview of the different approaches to machine learning. And in a dedicated post titled ‘What the hell is data fusion?’, he introduced a particular machine learning tool that will also play a big role in our discussion today: data fusion.

Now that all the required ingredients have been refreshed, let’s discuss how machine learning can be applied to affective computing. In my last post I already told you about one of the projects we are currently working on, the Beaming project. In this project, we are applying machine learning techniques to develop advanced methodologies for emotion detection. Working with data from immersive multisensory environments such as the Beaming scenarios, we will use data fusion techniques to combine the results of several emotion detection methodologies from different sources in order to obtain a joint emotion detection that is stronger and more robust.

The idea behind it is that the information provided by different sources may be complementary, so fusing it should result in a more accurate detection. For example, the detection from one specific facial expression may be ambiguous between several emotions, and adding the mental state detected from EEG data may rule out the incorrect ones. Following this idea, we are working on the implementation of a data fusion scheme that combines the results of emotion detection methodologies from EEG, visual, HRV and galvanic skin response signals.
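To make the idea concrete, here is a minimal sketch of one simple way to fuse detections: a weighted average of per-source emotion scores. It is not the scheme used in the Beaming project; the emotion labels, the scores and the `fuse_scores` and `decide` helpers are all hypothetical and chosen only for illustration.

```python
# Hypothetical emotion labels, for illustration only.
EMOTIONS = ["joy", "fear", "surprise", "sadness"]


def fuse_scores(scores_by_source, weights=None):
    """Weighted average of per-source emotion scores, renormalised to sum to 1."""
    sources = list(scores_by_source)
    if weights is None:
        weights = {s: 1.0 for s in sources}  # assumption: equal trust in every source
    total_w = sum(weights[s] for s in sources)
    fused = {
        e: sum(weights[s] * scores_by_source[s][e] for s in sources) / total_w
        for e in EMOTIONS
    }
    norm = sum(fused.values())
    return {e: v / norm for e, v in fused.items()}


def decide(fused):
    """Pick the emotion with the highest fused score."""
    return max(fused, key=fused.get)


# Made-up scores: the face detector cannot separate fear from surprise,
# while the EEG detector leans towards fear.
face = {"joy": 0.05, "fear": 0.45, "surprise": 0.45, "sadness": 0.05}
eeg = {"joy": 0.10, "fear": 0.60, "surprise": 0.20, "sadness": 0.10}

fused = fuse_scores({"face": face, "eeg": eeg})
print(decide(fused))  # fear
```

With these made-up numbers the face detector alone is tied between fear and surprise, and the EEG scores break the tie. A weighted average is only one of many possible fusion operators.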

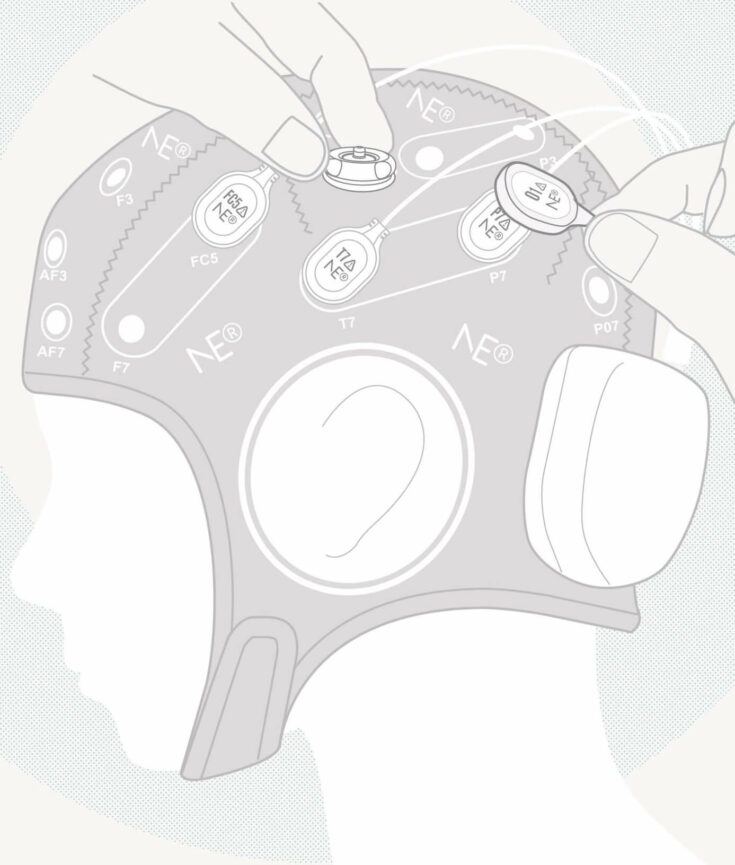

Another application of machine learning for emotion detection that we are developing within the Beaming project is the selection of channels and features for emotion detection from EEG. In the blog post titled ‘How to squeeze machine learning for EEG data analysis’, Aureli Soria-Frisch already presented different applications of machine learning tools for feature selection, and in this post I want to explain how we are working to improve the methodologies I presented in ‘Emotion recognition using EEG’.

Using evolutionary algorithms, we are selecting which channel combinations provide the best performance when applied to the methodologies presented in the aforementioned post. For example, for the alpha asymmetry index, different channels can be selected in each hemisphere. Also, within each hemisphere, the selected channels can be combined through a weighted average. For this example, we use two machine learning tools: aggregation operators (a type of data fusion operator) for averaging among channels and a genetic algorithm for channel selection.
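The sketch below shows how these two tools could fit together on toy data. Everything in it is an assumption made for illustration: the channel names, the fixed weights, the synthetic trials, the fitness function and the form of the index (here the log of the right-hemisphere alpha power minus the log of the left-hemisphere alpha power, one common convention). It is not our actual pipeline.

```python
import math
import random

LEFT = ["F3", "F7", "FC5"]    # hypothetical left-hemisphere channels
RIGHT = ["F4", "F8", "FC6"]   # hypothetical right-hemisphere channels
CHANNELS = LEFT + RIGHT


def weighted_mean(values, weights):
    """Aggregation operator: weighted average of the selected channels."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def asymmetry(alpha_power, mask, weights):
    """ln(right) - ln(left), each side aggregated over its selected channels."""
    def side(names):
        picked = [c for c in names if mask[CHANNELS.index(c)]]
        return weighted_mean([alpha_power[c] for c in picked],
                             [weights[c] for c in picked])
    return math.log(side(RIGHT)) - math.log(side(LEFT))


def is_valid(mask):
    """A mask needs at least one channel selected in each hemisphere."""
    return any(mask[:len(LEFT)]) and any(mask[len(LEFT):])


def fitness(mask, trials, labels, weights):
    """Toy fitness: fraction of trials where the index sign matches the label."""
    if not is_valid(mask):
        return 0.0
    hits = sum((asymmetry(t, mask, weights) > 0) == bool(y)
               for t, y in zip(trials, labels))
    return hits / len(trials)


def evolve(trials, labels, weights, pop_size=20, generations=30, p_mut=0.1, seed=0):
    """Small genetic algorithm over binary channel masks."""
    rng = random.Random(seed)
    n = len(CHANNELS)

    def score(mask):
        return fitness(mask, trials, labels, weights)

    # Start from the all-channels mask plus random masks.
    pop = [[1] * n] + [[rng.randint(0, 1) for _ in range(n)]
                       for _ in range(pop_size - 1)]
    for _ in range(generations):
        pop.sort(key=score, reverse=True)
        parents = pop[: pop_size // 2]        # truncation selection, keeps the elite
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)         # one-point crossover
            child = a[:cut] + b[cut:]
            child = [1 - g if rng.random() < p_mut else g for g in child]  # bit flips
            children.append(child)
        pop = parents + children
    return max(pop, key=score)


def toy_trials(n=40, seed=1):
    """Synthetic alpha powers: only F3/F4 carry the label, the rest is noise."""
    rng = random.Random(seed)
    trials, labels = [], []
    for i in range(n):
        y = i % 2
        t = {c: rng.uniform(0.5, 1.5) for c in CHANNELS}
        t["F3"], t["F4"] = 1.0, (1.5 if y else 0.6)
        trials.append(t)
        labels.append(y)
    return trials, labels


trials, labels = toy_trials()
weights = {c: 1.0 for c in CHANNELS}          # assumption: fixed, equal weights
best = evolve(trials, labels, weights)
print(best, fitness(best, trials, labels, weights))
```

Here the weights are fixed and only the channel mask is evolved. A fuller version could encode the weights in the chromosome as well, and would measure fitness on held-out data rather than on the same trials used for the search.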

But we don’t want to restrict ourselves to a few particular aggregation operators: we want to explore different data fusion operators and how to combine them to generate new EEG features. Therefore, we will use another machine learning tool: a particular type of evolutionary algorithm called genetic programming (GP). We will investigate the application of different operators and the possible combinations among them. The selection of operators, their application schemes (order, parameters, etc.), and even the selection of inputs (EEG channels or even simpler features) will be explored and determined using genetic programming.
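As a rough illustration of what genetic programming manipulates, the sketch below represents a candidate EEG feature as an expression tree whose inner nodes are aggregation operators and whose leaves are input channels. It only covers the building blocks (random tree growth, evaluation and subtree mutation), not a full GP loop with fitness and crossover. The operator set and channel names are hypothetical.

```python
import random

# Hypothetical operator set: each one aggregates a list of numbers into one number.
OPERATORS = {
    "mean": lambda xs: sum(xs) / len(xs),
    "min": min,
    "max": max,
}
TERMINALS = ["F3", "F4", "F7", "F8"]  # hypothetical input channels


def random_tree(rng, depth=2):
    """Grow a random expression: a channel name or (operator, [subtrees])."""
    if depth <= 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    op = rng.choice(sorted(OPERATORS))
    return (op, [random_tree(rng, depth - 1) for _ in range(2)])


def evaluate(tree, sample):
    """Compute the feature value of a tree for one sample (channel -> value)."""
    if isinstance(tree, str):
        return sample[tree]
    op, children = tree
    return OPERATORS[op]([evaluate(c, sample) for c in children])


def mutate(tree, rng, depth=2):
    """Subtree mutation: replace one randomly chosen node with a fresh subtree."""
    if isinstance(tree, str) or rng.random() < 0.3:
        return random_tree(rng, depth)
    op, children = tree
    i = rng.randrange(len(children))
    new_children = list(children)
    new_children[i] = mutate(children[i], rng, depth - 1)
    return (op, new_children)


sample = {"F3": 1.0, "F4": 2.0, "F7": 3.0, "F8": 4.0}  # made-up feature values
hand_built = ("mean", ["F3", ("max", ["F4", "F8"])])
print(evaluate(hand_built, sample))  # 2.5

rng = random.Random(0)
candidate = random_tree(rng)
variant = mutate(candidate, rng)
print(candidate, evaluate(candidate, sample))
print(variant, evaluate(variant, sample))
```

A complete GP run would keep a population of such trees, score each one by how well its feature supports emotion detection, and breed the best ones through crossover and mutation.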

All in all, today I wanted to show you the opportunity that the Beaming project offers to develop a practical application of machine learning techniques in a particular area of affective computing: emotion recognition. This is still a work in progress, with exciting results to come. Stay tuned and don’t miss what’s next!

Photo credits: Steve Burnett and S. Jähnichen