Artificial Neural Networks (ANNs) are machine learning models inspired by how the brain works. In my next posts I will try to introduce artificial neural networks in a simple, high-level way, highlighting their capabilities but also showing their limitations. In this first post, I will introduce the simplest neural network, the Rosenblatt perceptron, a neural network composed of a single artificial neuron. This artificial neuron model is the basis of today’s complex neural networks and remained the state of the art in ANNs until the mid-eighties.

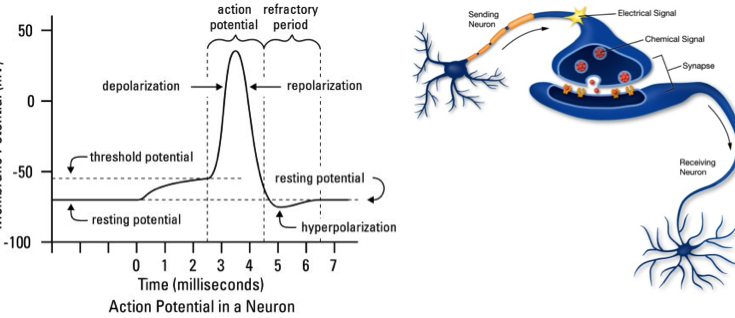

As ANNs are inspired by the brain, let’s start by describing how the brain works. The brain is a network of interconnected neurons (approximately 86 × 10^9) that communicate by means of electrical and chemical signals across junctions known as synapses, through which information flows from one neuron to others. When a neuron is inactive, the electrical potential difference across its membrane (the resting potential) is typically around −70 mV. A neuron receives signals on its dendrites from the axon terminals of other neurons. At these synapses, neurotransmitters are released that can be either excitatory or inhibitory. If excitatory inputs raise the membrane voltage until it reaches a certain threshold, the cell depolarizes and fires an action potential that travels along its axon towards other neurons. Communication between neurons therefore takes place when the action potential arrives at the axon terminal of the presynaptic neuron, which then releases neurotransmitters onto the postsynaptic neuron.

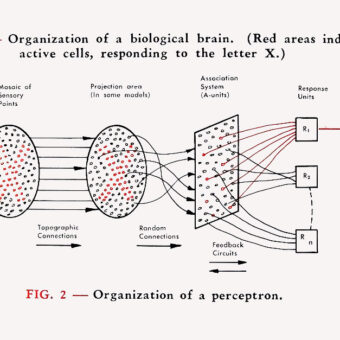

Inspired by the following biological principles of a neuron, Frank Rosenblatt developed the concept of the perceptron at the Cornell Aeronautical Laboratory in 1957:

- A neuron receives ‘communication messages’ from other neurons in the form of electrical impulses of different strengths that can be excitatory or inhibitory.

- A neuron integrates all the impulses received from other neurons.

- If the result of this integration is larger than a certain threshold, the neuron ‘fires’, triggering an action potential that is transmitted to other connected neurons.

Frank Rosenblatt’s perceptron model

The Rosenblatt perceptron is a binary, single-neuron model. Input integration is implemented as the sum of the weighted inputs $x_i$. The weights $w_i$ are learned during a training stage and then kept fixed. If this sum is larger than a given threshold $\theta$, the neuron fires. When the neuron fires, its output $y$ is set to 1. Otherwise, it is set to 0:

$$
y = \begin{cases} 1 & \text{if } \sum_{i=1}^{n} w_i x_i > \theta \\ 0 & \text{otherwise} \end{cases}
$$
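The threshold rule above can be sketched in a few lines of Python. This is a minimal illustration, not Rosenblatt’s original implementation. The function name `perceptron_output` is hypothetical. So are the hand-picked weights and threshold, which were chosen so that the neuron behaves like a logical AND of two binary inputs.

```python
from typing import Sequence


def perceptron_output(weights: Sequence[float], inputs: Sequence[float], theta: float) -> int:
    """Threshold form of the perceptron: return 1 if sum_i w_i * x_i > theta, else 0."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    # Integrate the incoming signals: positive weights play the role of
    # excitatory connections, negative weights of inhibitory ones.
    activation = sum(w * x for w, x in zip(weights, inputs))
    # Fire only if the integrated signal exceeds the threshold.
    return 1 if activation > theta else 0


# Hypothetical hand-picked parameters that make the neuron compute a logical AND.
weights = [1.0, 1.0]
theta = 1.5

for inputs in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(inputs, perceptron_output(weights, inputs, theta))
```

With these parameters only the input `[1, 1]` pushes the weighted sum (2.0) above the threshold of 1.5. It is therefore the only input for which the neuron outputs 1.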

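Moving the threshold to the left-hand side and defining the bias term $b = -\theta$, the equation can be rewritten as follows:

$$
y = \begin{cases} 1 & \text{if } \sum_{i=1}^{n} w_i x_i + b > 0 \\ 0 & \text{otherwise} \end{cases}
$$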
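To check that the bias form is only a rewriting of the threshold form, the sketch below evaluates both versions on every binary input pair, with the bias set to minus the threshold. The function names and the example values (`weights = [1.0, 1.0]`, `theta = 1.5`) are hypothetical, chosen only for illustration.

```python
from itertools import product
from typing import Sequence


def fires_with_threshold(weights: Sequence[float], inputs: Sequence[float], theta: float) -> int:
    # Threshold form: output 1 if sum_i w_i * x_i > theta, else 0.
    return 1 if sum(w * x for w, x in zip(weights, inputs)) > theta else 0


def fires_with_bias(weights: Sequence[float], inputs: Sequence[float], bias: float) -> int:
    # Bias form: output 1 if sum_i w_i * x_i + b > 0, else 0.
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


# Hypothetical example values.
weights = [1.0, 1.0]
theta = 1.5
bias = -theta  # b = -theta

# Both forms must agree on every combination of binary inputs.
for inputs in product([0, 1], repeat=2):
    y_threshold = fires_with_threshold(weights, inputs, theta)
    y_bias = fires_with_bias(weights, inputs, bias)
    print(inputs, y_threshold, y_bias)
    assert y_threshold == y_bias
```

Writing the threshold as a bias is convenient because $b$ can then be treated like one more weight, one whose input is always 1.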

This model implements the functioning of a single neuron that can solve linearly separable classification problems through very simple learning algorithms. Rosenblatt perceptrons are considered the first generation of neural networks (the network is composed of only one neuron ☺). The main limitation of this simple single-neuron model is that it cannot solve problems that are not linearly separable. In my next post I will describe how this limitation was overcome and what happens when we have a layer of several perceptrons or try different neuron activation functions. Stay tuned!
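The sketch below illustrates both the simple learning algorithm and the limitation mentioned above. It uses the classic perceptron learning rule, which updates each weight by $\eta\,(t - y)\,x_i$ and the bias by $\eta\,(t - y)$ whenever the prediction $y$ differs from the target $t$. The learning rate $\eta = 1$, the zero initialization, the 100-epoch cap and the helper names `predict` and `train_perceptron` are assumptions for this example. Logical AND is linearly separable, so training reaches zero errors. XOR is not, so no single perceptron can classify all four points and the loop never converges.

```python
from typing import List, Sequence, Tuple

# A training sample: (binary input vector, target output 0 or 1).
Sample = Tuple[Sequence[float], int]


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    # Bias form of the perceptron: fire if w . x + b > 0.
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(data: List[Sample], learning_rate: float = 1.0, max_epochs: int = 100):
    """Perceptron learning rule. Returns (weights, bias, converged)."""
    weights = [0.0] * len(data[0][0])  # assumed zero initialization
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # 0, +1 or -1
            if error != 0:
                errors += 1
                # Move the decision boundary towards the misclassified sample.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if errors == 0:  # a full pass without mistakes: training has converged
            return weights, bias, True
    return weights, bias, False


AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

w, b, converged = train_perceptron(AND)
print("AND converged:", converged, [predict(w, b, x) for x, _ in AND])

w, b, converged = train_perceptron(XOR)
print("XOR converged:", converged)
```

With these settings, the AND run converges after a few epochs. The XOR run stops at the epoch cap with `converged` set to `False`, because no choice of weights and bias separates its two classes with a single straight line.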

Credits:

Neural Networks for Pattern Recognition, Christopher Bishop

Suárez, J.R. Dorronsoro, A. Barbero, Dpto. de Ingeniería Informática and Instituto de Ingeniería del Conocimiento, Escuela Politécnica Superior de Madrid – ASDM 2016

Image Credits 1: http://learn.genetics.utah.edu/content/addiction/neurons/

Image Credits 2: Wikipedia.org