At the heart of the world of neural networks lies the Rosenblatt Perceptron. This single artificial neuron, the simplest possible neural network, is the basis of the complex neural networks we marvel at today. These networks have reshaped machine learning by drawing inspiration from the workings of the human brain.

In this post, we’ll look at the foundation of artificial neural networks (ANNs), the Rosenblatt Perceptron, and at its significance and limitations within the broader neural network landscape.

What is an Artificial Neural Network?

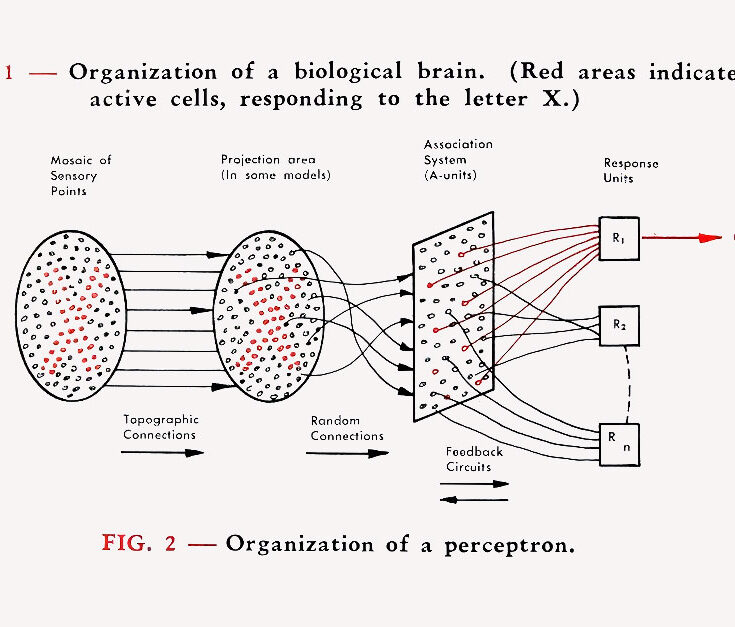

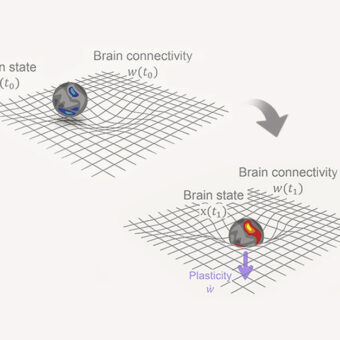

Before we dive into the Rosenblatt Perceptron, let’s first look at the blueprint: the brain’s network of neurons. This intricate web, comprising billions of neurons, communicates through electrical and chemical signals passed across junctions known as synapses. Neurons operate with varying membrane potentials and neurotransmitters, and their collective activity gives rise to cognition.

ANNs are composed of interconnected layers of nodes: an input layer, one or more hidden layers, and an output layer. Each node, or artificial neuron, connects to other nodes and has an associated weight and threshold. When the output of a node exceeds its threshold, the node is activated and passes data on to the next layer of the network. Otherwise, no data is passed along.
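To make the threshold idea concrete, here is a minimal sketch of a single node in plain Python. The function name `node_output` and all input, weight, and threshold values are hypothetical, chosen only for illustration. Real networks usually replace the hard threshold with a smoother activation function.

```python
def node_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


# Hypothetical example values (not taken from any real network).
inputs = [1.0, 0.0, 1.0]
weights = [0.5, -0.6, 0.4]

# The weighted sum is 0.5 + 0.0 + 0.4 = 0.9.
fires = node_output(inputs, weights, threshold=0.8)   # 0.9 exceeds 0.8, so the node activates
silent = node_output(inputs, weights, threshold=1.0)  # 0.9 does not exceed 1.0, so it stays silent
```

The same inputs and weights either activate the node or not depending only on the threshold, which is why the threshold (or, equivalently, a bias term) is treated as a parameter of the node alongside its weights.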

Neural networks rely on training data to learn and improve their accuracy. Once tuned, they become powerful tools in computer science and AI for classifying and clustering data at high speed. Tasks like speech and image recognition can be completed far faster than manual identification by humans. Google’s search algorithm is one well-known example of neural networks in use.

Artificial Neural Networks (ANNs), also called Simulated Neural Networks (SNNs), are a subset of machine learning and form the core of deep learning methods. Their name and architecture are inspired by the human brain, and they emulate the way biological neurons signal to one another.

The Origin of the Rosenblatt Perceptron

In 1957, Frank Rosenblatt introduced a groundbreaking concept at the Cornell Aeronautical Laboratory – the perceptron. This innovation drew heavily on the biological behavior of neurons. Just as a neuron processes signals and either transmits or inhibits them, the perceptron aims to replicate this behavior through mathematical operations.

A neuron gets “messages” from other neurons as electrical impulses of varying strength, which can be either excitatory or inhibitory. It combines these impulses, and if the total is above a specific threshold, the neuron sends out a signal, called an action potential, to other linked neurons.

The Rosenblatt Perceptron is a single neuron with a binary output that solves linear classification problems using a simple learning algorithm. Yet its limitations soon emerged: it cannot solve problems that are not linearly separable, with the XOR function as the classic example. This limitation marked the starting point of neural network evolution.
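Both the learning algorithm and its limitation can be sketched in a few lines of Python. This is an illustrative version of the classic perceptron learning rule, not Rosenblatt's original implementation. The function names, the learning rate, and the epoch limit are assumptions made for the example. It trains the same neuron on AND, which is linearly separable, and on XOR, which is not.

```python
def predict(weights, bias, x):
    """Binary output: 1 if the weighted sum plus bias is positive, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, max_epochs=100):
    """Perceptron learning rule; returns (weights, bias, converged)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)
            if error != 0:
                errors += 1
                # Nudge the weights and bias toward the correct answer.
                weights = [w + learning_rate * error * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if errors == 0:
            # A full pass with no mistakes: the data has been separated.
            return weights, bias, True
    return weights, bias, False


points = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [0, 0, 0, 1]  # linearly separable
xor_labels = [0, 1, 1, 0]  # not linearly separable

and_w, and_b, and_converged = train_perceptron(points, and_labels)
xor_w, xor_b, xor_converged = train_perceptron(points, xor_labels)

print("AND learned:", and_converged)
print("XOR learned:", xor_converged)
```

On AND, training stops after a few passes once every point is classified correctly. On XOR, no straight line separates the two classes, so at least one point is misclassified in every pass and the loop runs until `max_epochs` without converging.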

A Glimpse of the Future of Neural Networks

The Rosenblatt Perceptron remains a cornerstone in the history of modern neural networks. Despite its humble origins, its influence echoes through the landscape of artificial intelligence. The elegance of its simplicity acted as a catalyst for progress, fuelling deeper insights into cognition and learning processes and setting the stage for the neural networks that followed.

As we retrace the origins of neural networks, we stand on the cusp of unparalleled possibilities. The conquest of non-linear separability and the rise of multi-layer architectures herald the dawn of deep learning. The Rosenblatt Perceptron’s legacy lives on as a testament to human ingenuity and our relentless pursuit of AI’s limitless potential.

Conclusion

In closing, the journey from the Rosenblatt Perceptron to the cutting-edge neural networks of today exemplifies the fusion of inspiration, innovation, and determination. As we forge ahead, the narrative of neural networks unfolds, driven by the desire to emulate the intricacies of the human mind and redefine the boundaries of artificial intelligence.
