How can AI and EEG interpret our emotions in real time? In this article, we look at how our Enobio headset is being used to read people’s emotions at the #LANUBE{IA} exhibition at CaixaForum Valencia. So get ready to discover the future of neuroscience and AI integration.

Transforming EEG Data into Visuals

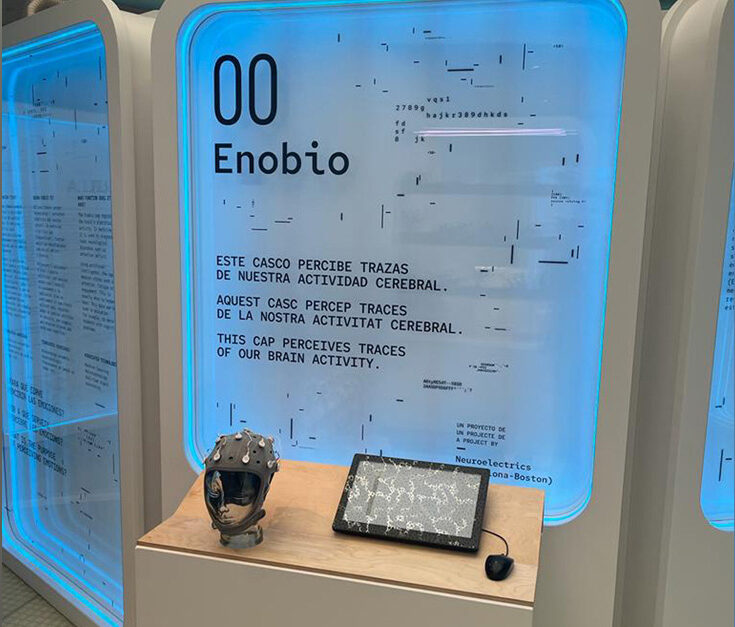

At the exhibition, we use an Enobio® head cap with 20 channels to capture participants’ brain signals (EEG, electroencephalography) while they experience four different stations. Before they begin their tour of the exhibition, we record each participant’s baseline, i.e., their brain activity at rest, so that the results reflect only the affective and cognitive states related to the exhibition.
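One common way to use a resting baseline is to express each later measurement relative to it. The sketch below is a minimal illustration of that idea, not a description of the exhibition's actual pipeline: the function name `baseline_normalize`, the z-score approach, and the sample values are all assumptions made for the example.

```python
from statistics import mean, stdev


def baseline_normalize(task_values, baseline_values):
    """Express task-period values as z-scores relative to the resting baseline.

    Assumption: z-scoring is one plausible normalization; the article does
    not state which method is actually used.
    """
    mu = mean(baseline_values)
    sigma = stdev(baseline_values)  # sample standard deviation of the rest period
    if sigma == 0:
        raise ValueError("baseline has no variance; cannot normalize")
    return [(v - mu) / sigma for v in task_values]


# Hypothetical feature values (arbitrary units), invented for illustration.
rest = [10.0, 12.0, 11.0, 9.0, 13.0]
station_1 = [14.0, 15.0, 11.0]

z = baseline_normalize(station_1, rest)
print(z)
```

A value near zero means the participant is close to their resting level, while large positive or negative values indicate a departure from rest during the station.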

The EEG signals are filtered and processed in real time to remove ocular, muscle, and movement artifacts, as well as line noise. The EEG band power is then extracted in four frequency bands: Theta (4-8 Hz), Alpha (8-13 Hz), Beta (13-30 Hz), and Gamma (30-45 Hz). This information is used to calculate our ExperienceLab features (valence, arousal, engagement, attention, and fatigue) in each of the four stations.
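To make the band power step concrete, here is a minimal sketch that estimates power in the four bands listed above from a single channel using a windowed FFT. It assumes NumPy is available; the function name `band_powers`, the 500 Hz sampling rate, the Hann window, and the synthetic test signal are illustrative assumptions, and artifact removal is not shown.

```python
import numpy as np

# Band edges in Hz, taken from the text above.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}


def band_powers(signal, fs):
    """Return the mean periodogram power per band for a 1-D signal sampled at fs Hz."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()              # remove the DC offset
    windowed = signal * np.hanning(signal.size)  # Hann window reduces spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    powers = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs < hi)      # bins falling inside this band
        powers[name] = float(spectrum[mask].mean())
    return powers


# Synthetic signal (assumption): a 10 Hz sine, which lies in the alpha band, plus weak noise.
fs = 500  # sampling rate in Hz, chosen for illustration
t = np.arange(0, 2.0, 1.0 / fs)
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)

powers = band_powers(x, fs)
dominant = max(powers, key=powers.get)
print(powers, dominant)
```

In a real-time setting, the same calculation would be repeated on short sliding windows of each channel rather than on one fixed segment.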

Valence is defined on the pleasure-displeasure continuum and ranges from unpleasant to pleasant (Barrett et al., 1999). Arousal is defined as the level of activation and refers to a person's general level of alertness and wakefulness (Barrett et al., 1999). Valence and arousal are the two axes of the circumplex model of affect (Russell, 1980), in which, according to Russell, all emotions can be represented. Once these variables have been extracted continuously over time, they are averaged to give one value per station in the circumplex model, with valence on the horizontal axis and arousal on the vertical axis. Our cognitive variables are displayed over time (one value every 5 seconds) as bars. The average across all participants who have taken part in the exhibition is also shown, as a less opaque point in the circumplex and as a line in the cognitive variables' chart.
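The averaging described above can be sketched in a few lines. This is an illustration under stated assumptions, not the exhibition's code: the function names are hypothetical, the sample values are invented, and both scales are assumed to be centered on zero so that the sign of each coordinate identifies a quadrant.

```python
from statistics import mean


def station_point(valence_samples, arousal_samples):
    """Average continuous samples into one (valence, arousal) point for a station."""
    return (mean(valence_samples), mean(arousal_samples))


def quadrant(point):
    """Name the circumplex quadrant of a point, assuming both axes are centered on 0."""
    valence, arousal = point
    horizontal = "pleasant" if valence >= 0 else "unpleasant"
    vertical = "high arousal" if arousal >= 0 else "low arousal"
    return f"{horizontal}, {vertical}"


def group_point(points):
    """Average the per-participant points into the group point for a station."""
    return (mean([p[0] for p in points]), mean([p[1] for p in points]))


# Hypothetical samples for two visitors at the same station.
visitor_a = station_point([0.5, 0.25, 0.75], [-0.5, -0.25, -0.75])
visitor_b = station_point([-0.5, -0.5], [0.5, 0.5])
group = group_point([visitor_a, visitor_b])

print(visitor_a, quadrant(visitor_a))
print(visitor_b, quadrant(visitor_b))
print(group)
```

Here each visitor's point would be drawn at full opacity, and `group` corresponds to the less opaque point that summarizes all participants.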

Research and Educational Potential

Education takes center stage in the #LANUBE{IA} exhibition, as it serves as a catalyst for knowledge dissemination. Through the interactive EEG experience, visitors, including teachers, students, families, and researchers, can delve into the intersection of AI and EEG. This hands-on engagement fosters a deeper understanding of the transformative potential of AI in education, research, and the treatment of neurological and psychiatric disorders. Our participation in the exhibition not only aims to educate but also highlights our close collaboration with researchers and clinicians in advancing the field of neuroscience.

The Future of AI and EEG

This experience at CaixaForum offers a glimpse into the future of neuroscience and AI integration. As visitors wear our Enobio headset and watch their emotions visualized in real time, they see first-hand what AI and EEG technologies can offer. This interactive encounter ignites curiosity, fosters a passion for learning, and paves the way for future breakthroughs in understanding the human mind.

With the #LANUBE{IA} exhibition continuing to inspire and educate visitors until July 23, 2023, the presence of EEG technology highlights the potential of AI and EEG to help unravel the mysteries of the human mind. At Neuroelectrics, we invite anyone interested to visit the #LANUBE{IA} exhibition and explore the capabilities of AI and EEG integration for themselves. As the journey continues, the synergy between AI and EEG holds great promise for improved lives, enhanced well-being, and a deeper understanding of our most complex organ: the human brain.

If you want to develop a project like this, get in touch with our team here: https://www.neuroelectrics.com/contact